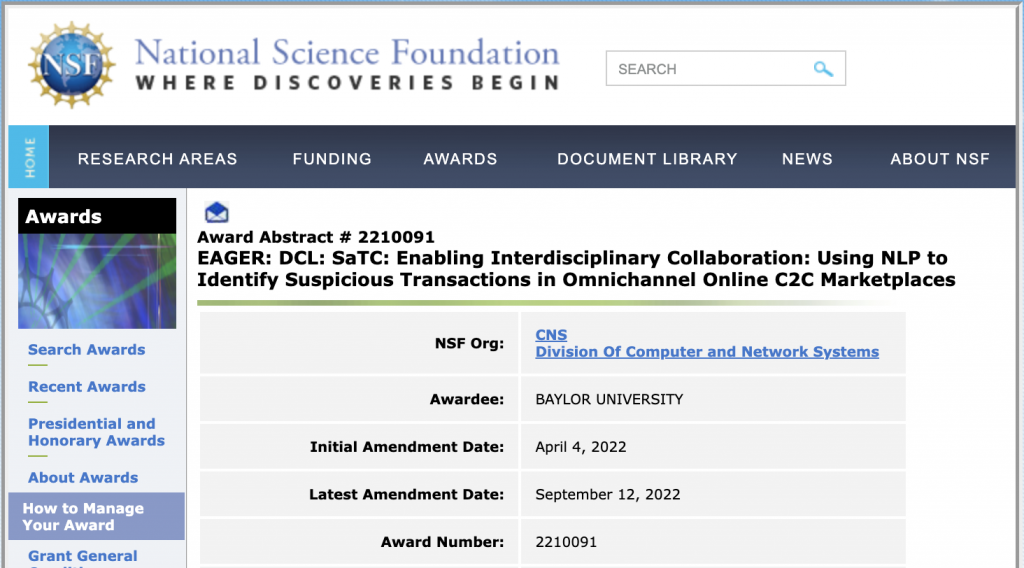

Baylor University has been awarded funding under the Secure and Trustworthy Cyberspace (SaTC) program for Enabling Interdisciplinary Collaboration. The grant is led by Principal Investigator Dr. Pablo Rivas, working with an amazing group of multidisciplinary researchers:

- Dr. Gissella Bichler from California State University San Bernardino, Center for Criminal Justice Research, School of Criminology and Criminal Justice.

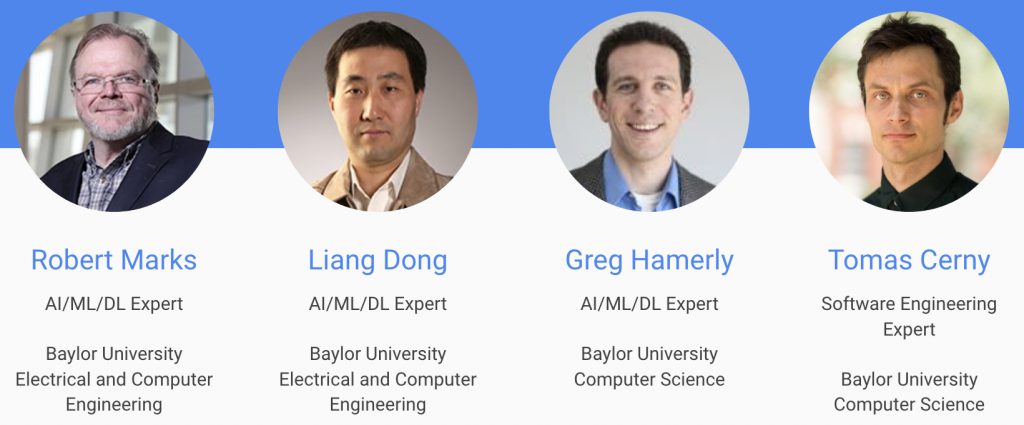

- Dr. Tomas Cerny is at Baylor University in the Computer Science Department, leading software engineering research.

- Dr. Laurie Giddens from the University of North Texas, a faculty member at the G. Brint Ryan College of Business.

- Dr. Stacy Petter is at Wake Forest University in the School of Business. She and Dr. Giddens have extensive research experience and funding in the area of human trafficking.

- Dr. Javier Turek, a Research Scientist in Machine Learning at Intel Labs, is our collaborator in matters related to machine learning for natural language processing.

We also have two Ph.D. students working on this project: Alejandro Rodriguez and Korn Sooksatra.

This project was motivated by the growing trend of people buying and selling goods and services directly from other people via online marketplaces. While many online marketplaces enable transactions among reputable buyers and sellers, some platforms are vulnerable to suspicious transactions. This project investigates whether it is possible to automate the detection of illegal goods or services within online marketplaces. First, the project team will analyze the text of online advertisements and marketplace policies to identify indicators of suspicious activity. Then, the team will adapt the findings to a specific context: locating stolen motor vehicle parts advertised via online marketplaces. Together, the work will lead to general ways to identify signals of illegal online sales that can be used to help people choose trustworthy marketplaces and avoid illicit actors. This project will also give law enforcement agencies and online marketplaces insights for gathering evidence on illicit goods or services sold on those marketplaces.

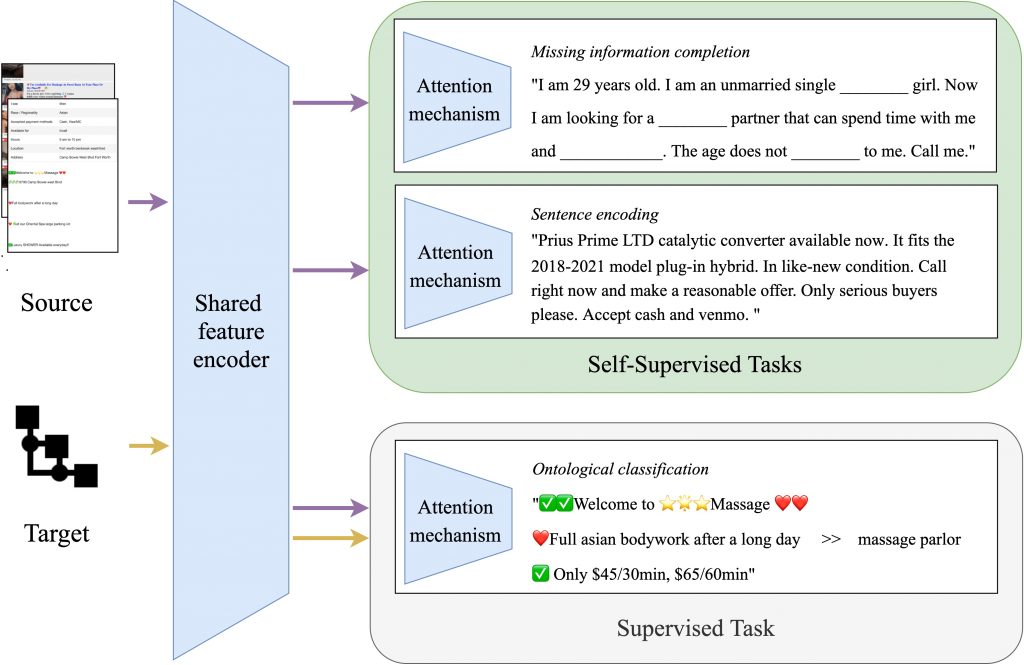

This research assesses the feasibility of modeling illegal activity in online consumer-to-consumer (C2C) platforms, drawing on platform characteristics, seller profiles, and advertisements to prioritize investigations with actionable intelligence extracted from open-source information. The project is organized around three main steps. First, the research team will combine knowledge from computer science, criminology, and information systems to analyze the policies of online marketplace platforms and identify the features, policies, and terms of service that make platforms more vulnerable to criminal activity. Second, building on the understanding of platform vulnerabilities developed in the first step, the researchers will generate and train deep learning-based language models to detect illicit online commerce. Finally, to assess the generalizability of the identified markers, the investigators will apply the models to markets for motor vehicle parts, a licit marketplace that sometimes includes sellers offering stolen goods. This project establishes a cross-disciplinary partnership among researchers from different institutions and academic disciplines, together with collaborators from law enforcement and industry, to develop practical, actionable insights.