In the rapidly evolving field of artificial intelligence, understanding the sensitivity of models to adversarial attacks is crucial. In our recent paper, Korn Sooksatra introduces the Sensitivity-inspired constrained evaluation method (SICEM) to address this concern.

Sooksatra, K., Rivas, P. Evaluation of adversarial attacks sensitivity of classifiers with occluded input data. Neural Comput & Applic 34, 17615–17632 (2022). https://doi.org/10.1007/s00521-022-07387-y

Understanding SICEM

Our proposed method, SICEM, evaluates how vulnerable an incomplete input is to an adversarial attack compared with a complete one. It does so using the Jacobian of the classifier's outputs with respect to the input: the sensitivity of the target classifier’s output to each attribute of the input is calculated, showing how changes in the input can affect the output.

$$
s(x,y)_i = \left|\min \left(0, \frac{\partial Z(x)_y}{\partial x_i} \cdot \left(\sum_{y' \neq y} \frac{\partial Z(x)_{y'}}{\partial x_i}\right) \cdot C(y, 1, 0)_i\right)\right|
$$

This sensitivity score gives us an insight into how much each attribute of the input contributes to the output’s sensitivity. The score is then used to estimate the overall sensitivity of the given input and its mask.
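As a rough illustration of the per-attribute score, the sketch below evaluates the formula on a toy linear classifier Z(x) = Wx, whose Jacobian is simply W. The function name `sensitivity_scores` and the weights are hypothetical, and the factor C(y, 1, 0)_i is not defined in this post, so the sketch assumes it is supplied by the caller and defaults to 1 for every attribute.

```python
def sensitivity_scores(jacobian, y, c=None):
    """Per-attribute score s(x, y)_i from a Jacobian given as a list of rows.

    jacobian[k][i] holds dZ(x)_k / dx_i. The factor c[i] stands in for
    C(y, 1, 0)_i; treating it as 1 by default is an assumption of this sketch.
    """
    n = len(jacobian[0])
    if c is None:
        c = [1.0] * n
    scores = []
    for i in range(n):
        target = jacobian[y][i]
        # Sum of the derivatives of every other class output w.r.t. attribute i.
        others = sum(row[i] for k, row in enumerate(jacobian) if k != y)
        # Only negative products contribute; positive ones are clipped to zero.
        scores.append(abs(min(0.0, target * others * c[i])))
    return scores


# Toy linear classifier with 3 classes and 3 attributes (hypothetical weights).
W = [
    [1.0, -2.0, 0.5],
    [-0.5, 1.0, 0.5],
    [-1.0, 0.5, 1.0],
]
scores = sensitivity_scores(W, y=0)
print(scores)  # [1.5, 3.0, 0.0]
```

The third attribute scores zero because its derivative for class 0 and the summed derivatives for the other classes have the same sign, so the product is positive and is clipped by the `min`.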

$$
S(x, M)_y = \sum_{i=0}^{n-1} \left(s(x, y)_i \cdot M_i\right)
$$
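The aggregation over the mask is a plain weighted sum, which the following minimal sketch makes concrete. The name `masked_sensitivity` and the example values are hypothetical; the sketch assumes M is a 0/1 vector in which M_i = 1 means attribute i is counted.

```python
def masked_sensitivity(scores, mask):
    """Overall sensitivity S(x, M)_y = sum_i s(x, y)_i * M_i."""
    if len(scores) != len(mask):
        raise ValueError("scores and mask must have the same length")
    return sum(s * m for s, m in zip(scores, mask))


# Hypothetical per-attribute scores and a mask that drops the second attribute.
example_scores = [1.5, 3.0, 0.0]
example_mask = [1, 0, 1]
print(masked_sensitivity(example_scores, example_mask))  # 1.5
```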

Comparing this value for an incomplete input against the value for the complete input gives the sensitivity ratio, a measure of how sensitive the classifier’s output is for an incomplete input relative to a complete one.
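This post does not spell out the formula for the sensitivity ratio. One plausible reading, assumed here purely for illustration, is the masked sensitivity divided by the sensitivity under an all-ones mask (the complete input). The function name `sensitivity_ratio` and the numbers are hypothetical; the paper's exact definition may differ.

```python
def sensitivity_ratio(scores, mask):
    """Assumed ratio: S(x, M)_y / S(x, all-ones)_y.

    This definition is an assumption of the sketch, not taken from the paper.
    Returns None when the complete-input sensitivity is zero.
    """
    masked = sum(s * m for s, m in zip(scores, mask))
    complete = sum(scores)  # equivalent to using a mask of all ones
    if complete == 0:
        return None
    return masked / complete


# Hypothetical scores; the mask removes the most sensitive attribute.
ratio = sensitivity_ratio([1.5, 3.0, 0.0], [1, 0, 1])
print(ratio)
```

Under this reading, a ratio close to 1 would mean the masked input retains most of the sensitivity of the complete input, and a ratio close to 0 would mean it retains very little.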

Results and Implications

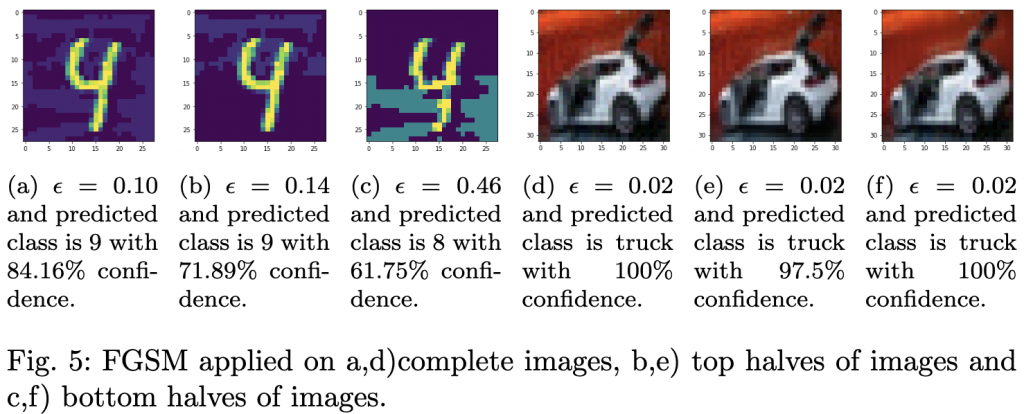

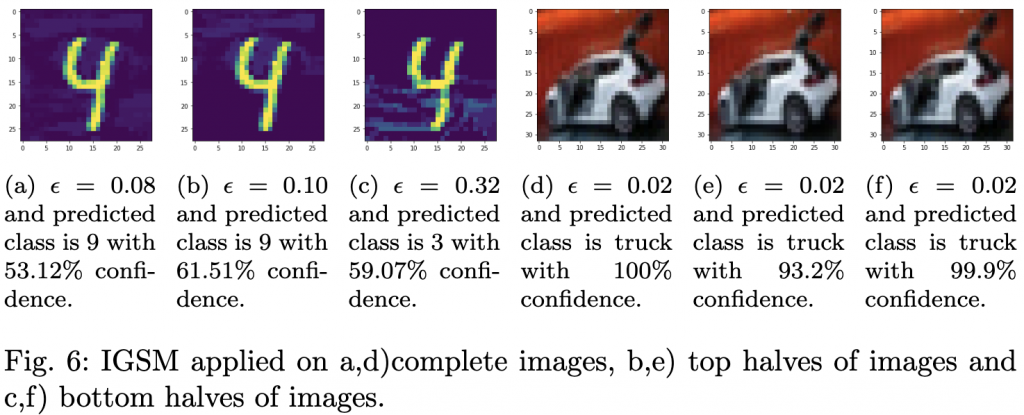

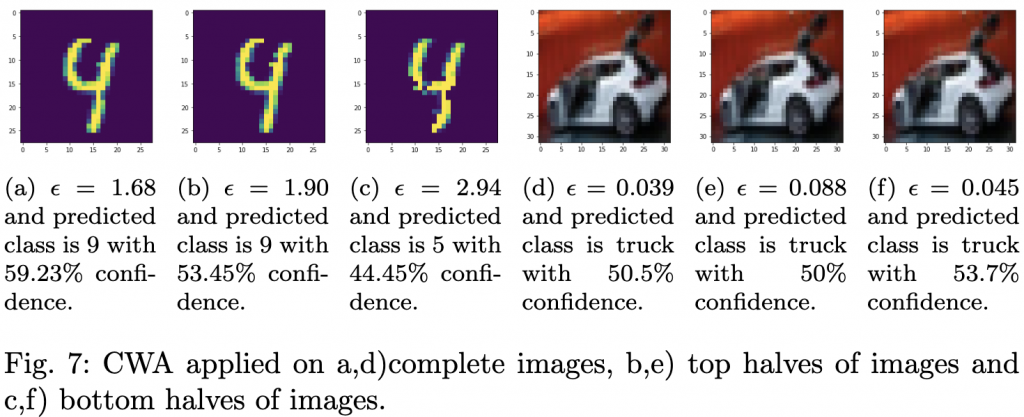

Our focus was on an automobile image from the CIFAR-10 dataset. Interestingly, adversarial examples generated by FGSM and IGSM required the same value of $\epsilon$, which was significantly lower than for other images. This can be attributed to the layer-wise linearity of the classifier. Larger inputs, like the automobile image, require a smaller $\epsilon$ to create an adversarial example. However, JSMA required a higher $\epsilon$ due to the metric of the $L_0$ norm.
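To make the role of linearity concrete, here is a toy sketch of a single FGSM step against a binary linear score f(x) = w·x + b. It is not the experimental setup of the paper: the model, weights, and function names are hypothetical, and the loss is assumed to be -label · f(x). For such a model, a step of size eps shifts the score by eps times the L1 norm of w, so the smallest eps that reaches the decision boundary has a closed form.

```python
def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def score(x, w, b):
    """Linear score f(x) = w . x + b."""
    return sum(xi * wi for xi, wi in zip(x, w)) + b


def fgsm_linear(x, w, label, eps):
    """One FGSM step x + eps * sign(grad_x loss) with loss = -label * f(x).

    For a linear score the gradient of the loss w.r.t. x is -label * w.
    """
    return [xi + eps * sign(-label * wi) for xi, wi in zip(x, w)]


def min_eps_to_boundary(x, w, b):
    """Smallest eps at which the FGSM step reaches f(x) = 0: |f(x)| / ||w||_1."""
    return abs(score(x, w, b)) / sum(abs(wi) for wi in w)


# Hypothetical weights and input; the clean input is classified as +1.
w, b = [2.0, -1.0, 1.0], 0.0
x = [1.0, 0.5, 0.5]
eps_star = min_eps_to_boundary(x, w, b)
print(eps_star)                                   # 0.5
print(score(fgsm_linear(x, w, 1, 0.25), w, b))    # 1.0, still positive
print(score(fgsm_linear(x, w, 1, 0.75), w, b))    # -1.0, sign flipped
```

Because the shift scales with the L1 norm of w, a linear model with more attributes or larger weights needs a smaller eps to cross the boundary in this toy setting.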

Understanding the sensitivity of AI models is paramount in ensuring their robustness against adversarial attacks. SICEM provides a tool for evaluating that sensitivity when inputs are incomplete, supporting safer and more reliable AI systems. Read the full paper at https://doi.org/10.1007/s00521-022-07387-y.