In today’s digital age, trust has become a precious commodity. It’s the invisible currency that fuels our interactions with technology and brands. Building trust, especially in technology, is a costly and time-consuming process. However, the payoff is immense. When users trust a system or a brand, they are more likely to engage with it, advocate for it, and remain loyal even when faced with alternatives.

One of the most effective ways to build trust in technology is to ensure it aligns with societal goals and values. When a system or technology operates in a way that benefits society and adheres to its values, it is more likely to be trusted and accepted.

However, artificial intelligence (AI) has struggled to earn that trust. Despite its immense potential and numerous benefits, trust in AI has suffered. This is due to various factors, including concerns about privacy, transparency, potential biases, and the lack of a clear ethical framework guiding its use.

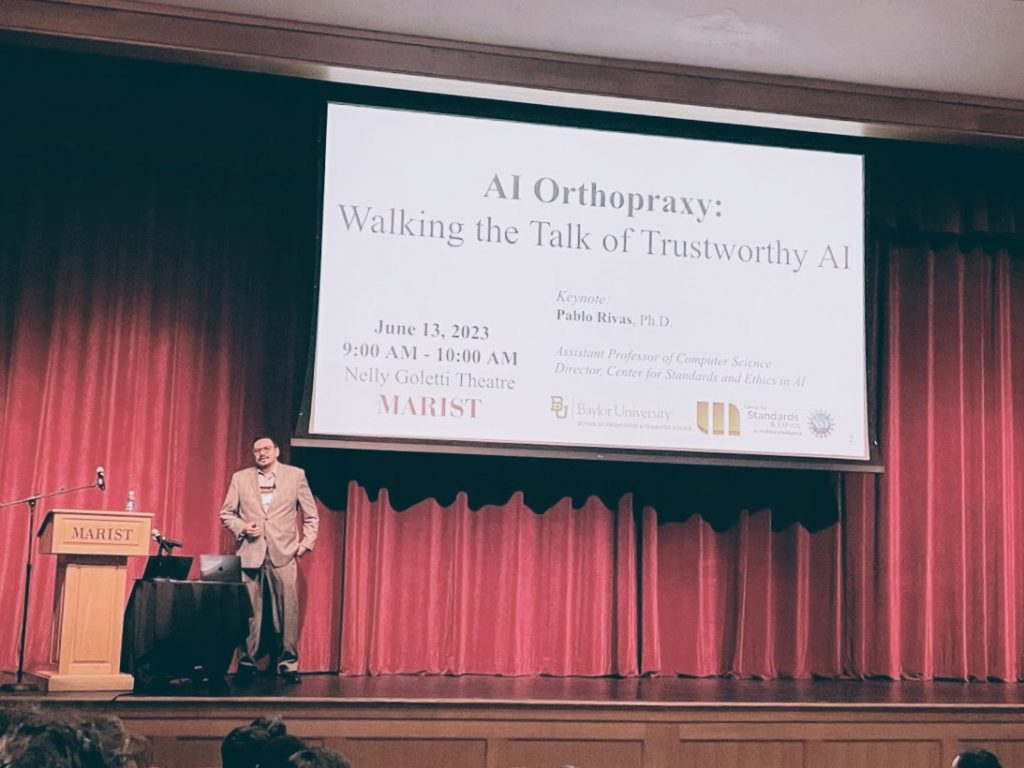

This is where the concept of AI Orthopraxy comes in. AI Orthopraxy is all about the correct practice of AI. It’s about ensuring that AI is developed and used in a way that is ethical, responsible, and aligned with societal values. It’s about walking the talk of trustworthy AI.

In this talk, I will discuss the concept of AI Orthopraxy, the recent developments in AI, the associated risks, and the tools and strategies we can use to ensure the responsible use of AI. The goal is not just to highlight the challenges but also to provide a roadmap for moving forward in a way that is beneficial for all stakeholders.

Large Language Models (LLMs) and Large Multimodal Models: The Ethical Implications

The journey of Large Language Models (LLMs) has been remarkable. From the early successes of models like GPT and BERT, we have seen a rapid evolution in the capabilities of these models. The most recent iterations, such as ChatGPT, have demonstrated an impressive ability to generate human-like text, opening up many applications in areas like customer service, content creation, and more.

In parallel, the field of vision models has also seen significant advancements. The introduction of models like the Vision Transformer (ViT) has changed how we process and understand visual data, leading to breakthroughs in medical imaging, autonomous driving, and more.

However, as with any powerful technology, these models come with their own challenges. One of the most concerning is their fragility, especially when faced with adversarial attacks. These attacks, which involve subtly modifying input data to mislead the model, have exposed the vulnerabilities of these models and raised questions about their reliability.
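To make the idea of "subtly modifying input data" concrete, here is a minimal sketch of one well-known attack, the fast gradient sign method, applied to a toy logistic-regression classifier. The talk does not name a specific attack, so this choice, the model weights, the input, and the `epsilon` budget are all illustrative assumptions rather than anything taken from a real system.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Probability of the positive class under a logistic-regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return sigmoid(z)


def fgsm_perturb(weights, bias, x, label, epsilon):
    """Shift each feature by epsilon in the direction that increases the loss.

    For logistic regression with cross-entropy loss, the gradient of the loss
    with respect to the input is (p - label) * weights.
    """
    p = predict(weights, bias, x)
    grad = [(p - label) * w for w in weights]
    signs = [(g > 0) - (g < 0) for g in grad]
    return [xi + epsilon * s for xi, s in zip(x, signs)]


# Hypothetical toy model and input (illustrative values only).
weights = [2.0, -3.0, 1.0]
bias = 0.0
x = [0.5, 0.1, 0.2]
label = 1  # the true class of x

x_adv = fgsm_perturb(weights, bias, x, label, epsilon=0.2)
clean_prob = predict(weights, bias, x)
adv_prob = predict(weights, bias, x_adv)

print("clean prediction:", round(clean_prob, 3))
print("adversarial prediction:", round(adv_prob, 3))
```

In this toy setting, no feature moves by more than 0.2, yet the predicted probability crosses the 0.5 decision threshold and the classification flips. Real attacks on deep models follow the same logic, with the gradient computed through the network.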

As someone deeply involved in this space, I see both the immense potential of these models and the serious risks they pose. But I firmly believe these risks can be mitigated with careful engineering and regulation.

Careful engineering involves developing robust models resistant to adversarial attacks and biases. It involves ensuring transparency in how these models work and making them interpretable so that their decisions can be understood and scrutinized.

On the other hand, regulation involves setting up rules and standards that guide the development and use of these models. It involves ensuring that these models are used responsibly and ethically and that there are mechanisms in place to hold those who misuse them accountable.

AI Ethics Standards: The Need for a Common Framework

Standards play a crucial role in ensuring technology’s responsible and ethical use. In the context of AI, they can help make systems fair, accountable, and transparent. They provide a common framework that guides the development and use of AI, ensuring that it aligns with societal values and goals.

One of the key initiatives in this space is the P70XX series of standards developed by the IEEE. These standards address various ethical considerations in system and software engineering and provide guidelines for embedding ethics into the design process.

Similarly, the International Organization for Standardization (ISO) has been working on standards related to AI. These standards cover various aspects of AI, including its terminology, trustworthiness, and use in specific sectors like healthcare and transportation.

The National Institute of Standards and Technology (NIST) has led efforts to develop a framework for AI standards in the United States. This framework aims to support the development and use of trustworthy AI systems and to promote innovation and public confidence in these systems.

The potential of these standards goes beyond just guiding the development and use of AI. There is a growing discussion about the possibility of these standards becoming recommended legal practice. This would mean that adherence to these standards would not just be a matter of ethical responsibility but also a legal requirement.

This possibility underscores the importance of these standards and their role in ensuring the responsible and ethical use of AI. However, standards alone are not enough. They need to be complemented by best practices in AI.

AI Best Practices: From Theory to Practice

As we navigate the complex landscape of AI ethics, best practices serve as our compass. They provide practical guidance on how to implement the principles of ethical AI in real-world systems.

One such best practice is the use of model cards for AI models. Model cards are like nutrition labels for AI models. They provide essential information about a model, including its purpose, performance, and potential biases. By providing this information, model cards help users understand what a model does, how well it does it, and any limitations it might have.
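As a rough illustration of the "nutrition label" idea, a model card can be represented as a small structured record that is checked for gaps before a model ships. The sketch below is a hypothetical minimal layout, not the schema of any published model card toolkit; the class, field names, model name, and accuracy figure are all made up for the example.

```python
from dataclasses import dataclass, field


@dataclass
class ModelCard:
    """Hypothetical, minimal model card; field names are illustrative."""
    name: str
    purpose: str
    performance: dict = field(default_factory=dict)  # metric name -> value
    known_biases: list = field(default_factory=list)
    limitations: list = field(default_factory=list)

    def missing_sections(self):
        """Return the names of sections that were left empty."""
        sections = {
            "purpose": self.purpose,
            "performance": self.performance,
            "known_biases": self.known_biases,
            "limitations": self.limitations,
        }
        return [name for name, value in sections.items() if not value]

    def render(self):
        """Format the card as plain text, one section per line."""
        metrics = ", ".join(f"{k}={v}" for k, v in self.performance.items())
        return "\n".join([
            f"Model: {self.name}",
            f"Purpose: {self.purpose}",
            f"Performance: {metrics or 'not reported'}",
            f"Known biases: {'; '.join(self.known_biases) or 'not reported'}",
            f"Limitations: {'; '.join(self.limitations) or 'not reported'}",
        ])


card = ModelCard(
    name="sentiment-classifier-demo",  # made-up model name
    purpose="Classify product reviews as positive or negative.",
    performance={"accuracy": 0.91},  # placeholder number, not a real result
    known_biases=["Trained mostly on English-language reviews."],
)
print(card.render())
print("Sections still to fill in:", card.missing_sections())
```

A check like `missing_sections` could be wired into a release process so that a model without documented limitations is flagged before it reaches users.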

Similarly, datasheets for datasets provide essential information about the datasets used to train AI models. They include details about the data collection process, the characteristics of the data, and any potential biases in the data. This helps users understand the strengths and weaknesses of the dataset and the models trained on it.

A related practice is the use of Data Statements for Natural Language Processing, proposed to mitigate system bias and enable better science in NLP technologies. Data Statements are intended to address scientific and ethical issues arising from using data from specific populations in developing technology for other populations. They are designed to help alleviate exclusion and bias in language technology, lead to better precision in claims about how NLP research can generalize, and ultimately lead to language technology that respects its users’ preferred linguistic style and does not misrepresent them to others.

However, these best practices are only effective if a trained workforce understands them and can implement them. This underscores the importance of education and training in AI ethics. It’s not enough to develop ethical AI systems; we must cultivate a workforce that can uphold these ethical standards in their work. Initiatives like the Center for Standards and Ethics in AI (CSEAI) promote responsible AI and develop a workforce equipped to navigate AI’s ethical challenges.

The Role of the CSEAI in Promoting Responsible AI

The Center for Standards and Ethics in AI (CSEAI) is pivotal in promoting responsible AI. Our mission at CSEAI is to provide applicable, actionable standard practices in trustworthy AI. We believe the path to responsible AI lies at the intersection of robust technical standards and solid ethical guidelines.

One of the critical areas of our work is developing these standards. We work closely with researchers, practitioners, and policymakers to develop standards that are technically sound and ethically grounded. These standards provide a common framework that guides the development and use of AI, ensuring that it aligns with societal values and goals.

In addition to developing standards, we also focus on state-of-the-art collaborative AI research and workforce development. We believe that responsible AI requires a workforce that is not just technically competent but also ethically aware. To this end, we offer training programs and resources that help individuals understand the ethical implications of AI, upcoming regulations, and the importance of bare minimum practices like Model Cards, Datasheets for Datasets, and Data Statements.

As the field of AI continues to evolve, so does the landscape of regulation, standardization, and best practices. At CSEAI, we are committed to staying ahead of these changes. We continuously update our value propositions and training programs to reflect the latest developments in the field and to ensure our standards and practices align with emerging regulations.

As the CSEAI initiative moves forward, we aim to ensure that AI is developed and used in a way that benefits all stakeholders. We believe that with the right standards and practices, we can harness the power of AI responsibly, ethically, and in line with societal values, in a manner that is profitable for our industry partners and safe, robust, and trustworthy for all users.

Conclusion: The Future of Trustworthy AI

As we look toward the future of AI, we find ourselves amidst a cacophony of voices. As my colleagues put it, on the one hand, we have the “AI Safety” group, which often stokes fear by highlighting existential risks from AI, potentially distracting from immediate concerns while simultaneously pushing for rapid AI development. On the other hand, we have the “AI Ethics” group, which tends to focus on the faults and dangers of AI at every turn, creating a brand of criticism hype and advocating for extreme caution in AI use.

However, most of us in the AI community operate in the quiet middle ground. We recognize the immense benefits that AI can bring to sectors like healthcare, education, and vision, among others. At the same time, we are acutely aware of the severe risks and harms that AI can pose. But we firmly believe that, as with electricity, cars, planes, and other transformative technologies, these risks can be minimized with careful engineering and regulation.

Consider the analogy of seatbelts in cars. Initially, many people resisted their use. We felt safe enough, with our mothers instinctively extending an arm in front of us during sudden stops. But when a serious accident occurred, the importance of seatbelts became painfully clear. AI regulation can be seen in a similar light. There may be resistance initially, but with proper safeguards in place, we can ensure that when something goes wrong—and it inevitably will—we will all be better prepared to handle it. More importantly, these safeguards will be able to protect those who are most vulnerable and unable to protect themselves.

As we continue to navigate the complex landscape of AI, let’s remember to stay grounded, to focus on the tangible and immediate impacts of our work, and to always strive for the responsible and ethical use of AI. Thank you.

This is a ChatGPT-generated summary of a noisy transcript of a keynote presented at Marist College on Tuesday, June 13, 2023, at 9 am as part of the Enterprise Computing Conference in Poughkeepsie, New York.